Where do you draw the line with AI?

I’ve been reading a lot about AI. A few of the feeds I subscribe to have become mostly AI-related, with posts ranging from “how AI helped us do X process 1000x faster” to “how AI is ruining everything” to “actually AI is OK for certain things” and so on. There are many viewpoints on the matter, and most people feel strongly about theirs. Either it’s really great and it’s the future, or it’s disgusting and will only make things worse.

Skip to what I’m seeing, what I think, itch.io consensus, conclusion.

Let’s start at the beginning

A few of my friends and I decided it would be fun to make a short game. We have been thinking about participating in game jams for a while, but our schedules have never aligned and the themes have ended up not being interesting to us. Eventually, I decided we should make the game in the spirit of a game jam, but without being part of an actual game jam. The goal: make something… anything at all in a three-month period and publish whatever gets made to itch.io (probably for free) after the time is up.

The “team” (if you want to call it that) is not a group of experienced game devs. These are people who have dabbled in various aspects of game development: 3D modeling, music composition, writing and worldbuilding (me), programming, etc. However, there isn’t an actual artist in this group of friends. I personally know a few artists, but being friends with an artist doesn’t mean they can join the project. That’s not because they don’t think it’s a good idea, but rather because doing work for a game is a time commitment.

That being said, anyone can learn to draw! Someone on the team can pick up the missing art skills, right? There are tons of resources out there, with the one I’m most familiar with being Drawabox. That’s very cool, but I already have a three-month time limit set in stone. Why not learn drawing first and come back later? Because that has happened before: we’ve gone and learned a new game engine or a new framework or writing style or whatever else, and we spent more time learning about this side thing than actually making the game. My opinion is we should make something with the skills we have now and come back later to improve the things that didn’t work.

The game

The type of game: a visual novel. This sounds like a terrible idea. After all, aren’t visual novels primarily about… the visuals?

Well yes, but if you do it right, you can save yourself a lot of time compared to other genres:

- Has visuals but no real animations, just a few still images you can cycle through

- No 3D modeling

- No complex programming

- Mostly text based, which only requires a lot of writing effort (that should be easy in this case)

In addition, I’ve made it easier by deciding the game will use retro graphics. Pixel art and limited color palettes, while adding a certain style to the game, also make it easier to get close to perfect (compared to full-quality graphics).

Making of Graphics

So you want to make graphics for a visual novel. There are two important aspects you need to cover:

- Backgrounds

- Character sprites

There are also small things like UI design, but they are not necessarily integral to playing the game, so we’ll ignore them for now. They are important but not the most important.

Backgrounds

It’s hard to say which is the “harder” one to do because it depends on the scenario, but in this case, backgrounds are the harder one to draw.

The idea I’ve seen many throw around (including real artists who work on anime) is reference image tracing. Tracing doesn’t always mean you trace directly over the source image, but generally it means you are trying to recreate a photographed image in your art style. While you’re not starting from scratch, it’s still a ton of work. Images often have many, many details in them that have to be accounted for. While you get things like perspective and random object clutter for free, you may want to adjust how something looks (it is art after all!) or change out geography, objects, landmarks, etc. to better fit the scene you’re drawing. I recently found out that there’s a term in Japanese, seichi junrei, for when fans visit the original locations that appear in anime (and possibly other forms of media).

You can also draw backgrounds without any reference except your own memories of seeing these locations. I’ve seen videos of people drawing a rough sketch of what they think something should look like (using perspective lines or even 3D modeling software) and then doing a really detailed image from that. There are all kinds of options.

Of course, people were drawing really intricate backgrounds long before any of these aids existed, but the main thing we’re trying to optimize here is time vs quality. We want the background to look decent without spending too much time on it, because there are many backgrounds and only so much time to do each one.

Another option, especially for indies, is to use royalty-free images and run a bunch of filters on them so they don’t look too much like stock photos. This will be the route we’ll most likely go down, but it limits you to the real-life locations that someone has actually photographed.
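To make “run a bunch of filters” a bit more concrete, here is a minimal sketch of the two filters that matter most for a retro look: shrinking the photo, then snapping every pixel to a small palette. This is not the pipeline we actually use; the palette, the function names, and the choice to represent an image as nested lists of (r, g, b) tuples are all assumptions made so the example runs without any image library.

```python
# Illustrative only: a tiny "stock photo to retro background" filter.
# Images are plain nested lists of (r, g, b) tuples so the sketch has no
# dependencies; a real project would more likely use an image library.

# Hypothetical 4-color palette (dark to light); swap in your own.
PALETTE = [(15, 56, 15), (48, 98, 48), (139, 172, 15), (155, 188, 15)]


def downscale(pixels, factor):
    """Shrink an image by averaging each factor x factor block of pixels."""
    height, width = len(pixels), len(pixels[0])
    out = []
    # Leftover rows/columns that don't fill a whole block are dropped.
    for y in range(0, height - height % factor, factor):
        row = []
        for x in range(0, width - width % factor, factor):
            block = [pixels[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            # Average each color channel over the block.
            row.append(tuple(sum(p[c] for p in block) // len(block)
                             for c in range(3)))
        out.append(row)
    return out


def nearest_color(pixel, palette):
    """Pick the palette entry with the smallest squared RGB distance."""
    return min(palette,
               key=lambda color: sum((a - b) ** 2 for a, b in zip(pixel, color)))


def retro_filter(pixels, factor=2, palette=PALETTE):
    """Downscale, then snap every pixel to the limited palette."""
    small = downscale(pixels, factor)
    return [[nearest_color(p, palette) for p in row] for row in small]
```

Calling `retro_filter(image, factor=4)` on a photo loaded into this format would give a quarter-size image using only the four palette colors. Plain nearest-color matching tends to produce flat bands, so dithering would be the obvious next filter to try.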

Characters

This one isn’t actually too bad. With the pixel art-styled graphics, character sprites are pretty simple and don’t require too many variants to feel fresh. We’ll talk more about reference in the next section, but I have found it possible to draw some (not amazing, but decent) characters without reference. However, on the subject of reference…

Reference

I don’t have a particular art style (because I don’t usually draw), so there’s no “drawing characters the way I usually do.” In order to better get an idea of how characters should look, I decided to go shopping for reference, for lack of a better term. This means going to art sites, searching for roughly the style I want, and trying to gather inspiration for what the style should (and should not) look like. This works very well when the theme is popular and well-defined, and not so well when the theme is not. For example, if you search “cowboy” on DeviantArt you can very easily find pictures of cowboys. Sometimes you’ll find pictures of the stereotypical version of something rather than what something looks like in real life, but sometimes that’s what you want.

So sure, you can look at DeviantArt, Tumblr, Pixiv, heck, even the websites that AI models are trained on, like Danbooru. But like the backgrounds, you’ll only get images that actually exist. And that’s probably fine! If you were doing this before the world of AI, this would be your only option.

A new challenger appears…

When tools like Stable Diffusion came out, I was pretty interested. I was running it on a pretty garbage GPU that took multiple minutes for one image. But what was interesting about it to me was being able to draw extremely silly images and turn them into more realistic-looking ones (with img2img). My goal in making these images wasn’t for anything other than my own amusement. It’s actually not very good at making art! It’s like playing the game 20 Questions: you already know the answer, you’re just curious if the game can figure out what you’re thinking.

So yes, I used Stable Diffusion for a little bit, even made a few concept images for some projects I was working on at the time to show others what I was thinking, and then forgot about it for a while.

Fast-forward to recently, when I thought it might be interesting to have AI draw some concept art for the game. I have a general idea of what the game should look like, but I’m not sure exactly what the style should look like. So why not hit the randomizer a few times and see what it comes up with?

As I expected, the results are… not great. Local Stable Diffusion models are never going to be as good as the commercial ones running in big data centers, but the images they spit out are not very good at all. It took about 50 images across multiple random models I had downloaded to get anything reasonable. And this was just for a placeholder background for testing!

As for characters, it constantly ignores your instructions and goes off doing whatever it feels like doing instead. Asking for one property makes it apply the property everywhere in the image, not just in one place. Sure, the overall quality can be decent (if you ignore fingers), but what you get is obviously not what was asked for.

In a few cases, SD bugged out and produced an obviously glitchy image, which turned out to be way better than anything else I’d seen before. I decided to recreate the image myself in pixel art and showed it to a few friends who generally liked the style. I wondered, if I recreate this glitchy image, am I using AI art?

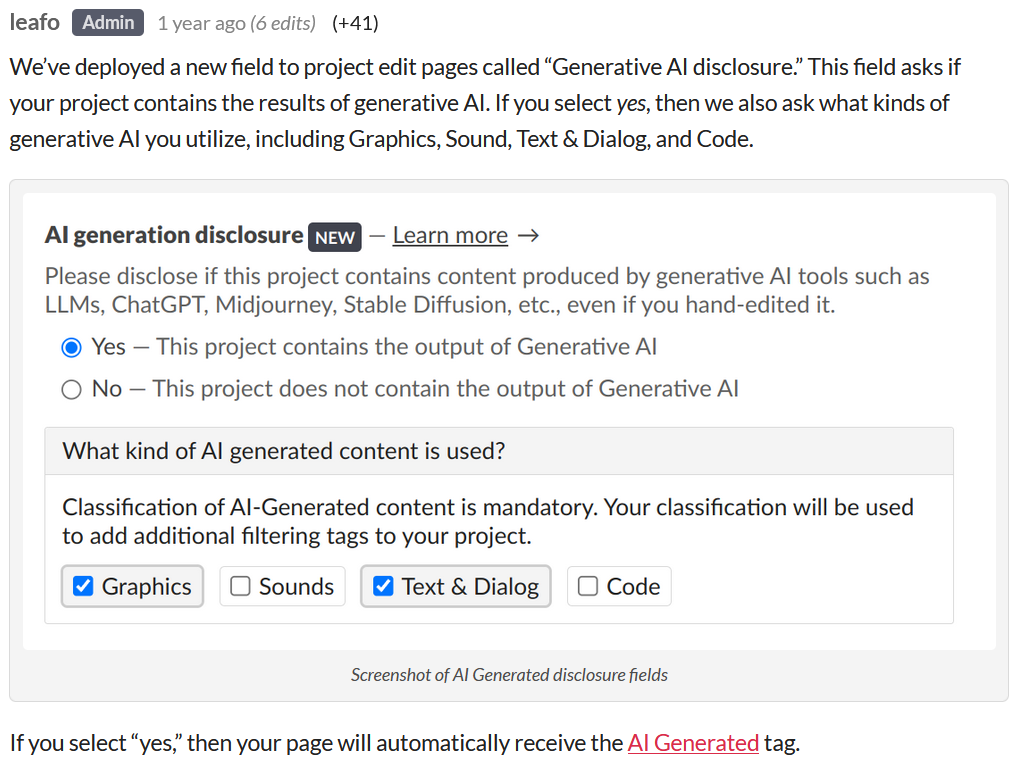

itch.io and the AI Disclosure Policy

As mentioned before, we planned to release this game on itch.io. I remembered something about AI disclosure recently, so I looked into it more.

Let’s look at the specific wording used (this is basically the only clarification we get).

Please disclose if this project contains content produced by generative AI tools such as LLMs, ChatGPT, Midjourney, Stable Diffusion, etc., even if you hand-edited it.

This sounds pretty clear at first, but on further thought, it’s still not clear where the line is drawn. What is considered “hand-editing”? Does it just mean cleaning up directly AI-produced images, or does making an image based on AI-produced images also count?

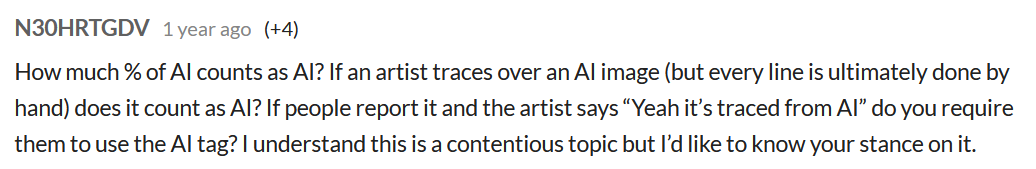

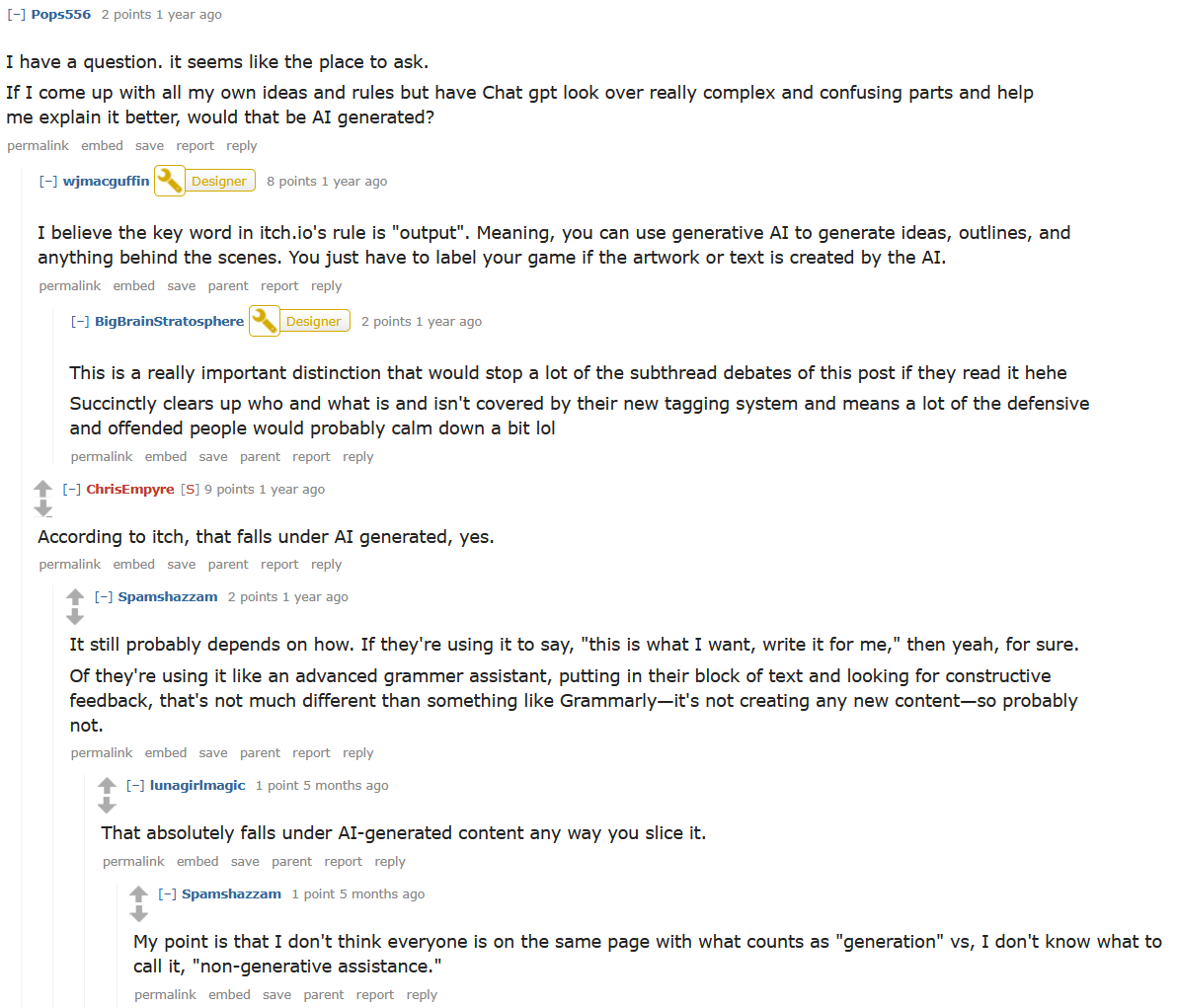

Well, no surprise, but someone already asked that in the same thread.

There is no answer from OP, but one of the commenters argues that “any percentage of AI should be classified as AI.” Should it be? As another commenter asked, should 5% of AI be tagged the same as 95% of AI?

What I’m seeing online

I would be lying if I said I didn’t notice any change in my search results in the past five years. It’s gotten pretty unbearable. I often look things up online only to be hit with five or six LLM-written sites. Oftentimes you can recognize them by long URLs like thewebsite.com/10-ways-to-fix-your-slow-pc/, but sometimes it’s harder to tell and you’ll only notice halfway through the article that everything it’s saying is gibberish. More often than not, I’m seeing blog posts (even from real humans) using unedited AI-generated images as the cover images. And in a world where we already have tons of misinformation, search engine AI results, AI bots on social media, and normal LLMs themselves are spreading even more of it, probably faster than ever before.

This is what I call a bad usage of AI. AI should not be used as the source of truth for anything. It just happens to spit out somewhat correct answers from time to time. And now we’re taking these answers, sometimes stripping away the fact that AI made them, and presenting them with the assumption that they’re real, authentic, and correct. It’s a trend that’s only getting larger and larger, but not one I’m on board with.

Before we get to my opinions, here are the arguments I’ve seen online for and against generative AI.

The arguments (for)

Allows non-coders to make code (vibe coding)

For those who can’t code at all, you can ask AI to write entire projects for you! As long as it works, what’s the problem? The code may be bad, but you can keep asking AI to fix it until it works.

Similarly, allowing non-artists to make art

AI art generators allow people to make images that they may not have been able to draw themselves. It’s also fun to see what AI will “come up” with given a silly prompt.

Tedious code generation

AI can take away the tedious work of programming. Why spend tons of time writing code, trying to find code that does what you want, tweaking existing code, debugging, and whatever else when you can ask AI and get the answers immediately? People value their time, and saving time seems like a big win and less headache.

Making art that wouldn’t otherwise be possible

Many psychedelic rock groups have at least one video using AI. Here’s a famous example from King Gizzard:

Could humans have made something like this manually? Additionally, is the act of a human constantly adding input into the AI not still human work, just assisted by an AI tool, the same as any other software tool?

If I don’t, someone else will

A bit of a weak argument, but might as well include it. If we (that being a community, a country, etc.) don’t improve on, iterate on, and use AI, then others will do it better than us. Often thought of as an “AI arms race”.

The arguments (against)

AI produces “slop”

No matter the medium, AI produces “slop”: low-effort content, made by the author or not, that is pushed out just to get some work out there. It is generally full of flaws and unappealing on closer inspection, even though at first glance you can often be tricked into thinking it isn’t AI.

Commercialized AI tools are becoming intrusive

Modern software is bloated with AI. AI this, AI that, etc. Stop giving me AI all the time.

AI generation uses a lot of energy and resources

The energy to both train AI models and to run inference is costly. It is damaging to the environment and not sustainable.

Training steals from the original creators

This applies to both art and code.

Art: Image generators are trained on massive amounts of public art. Bots scrape tons of images from art websites, often made by independent creators, and use them to train their models. Attribution is never given to the pages the images came from. Oftentimes, the training images have watermarks in them, which the image generators will then reproduce in the final generated image. Creators are not compensated.

Code: LLMs are trained on a lot of open source code. This code has different licensing, and having AI generate code from it could possibly be breaking code licenses. Additionally, when you use this code, you are stealing from real humans’ code without citing them. The AI can’t even tell you most of the time who wrote it. (In a recent example, AI did in fact know who wrote the code: [link])

Money spent goes to the wrong party

On a similar note, when you spend money for subscriptions like ChatGPT Plus/Pro or Gemini Pro or whatever they have now, the money goes to the companies running the software/hardware instead of the authors who made the original work that the AIs were trained on.

AI costs jobs

Arc Raiders recently had controversy over their TTS voices. For the game, they apparently paid voice actors to say a bunch of lines for the game with the intent that the voices would be used for TTS in game. This is so adding new lines or changing existing ones would be easier than calling the VAs back to do more lines. Note that the VAs reportedly knew this going in.

Those against believe that if we allow training AI on voices, companies will pay VAs once for their voices, then use those voices into the future without ever paying them again. Additionally, this cost-cutting could cause a lot of low-quality character VO to appear in the future. (Again, this issue is a bit more complex because the alternative would be to either drop these lines altogether or to bring VAs back for every new bit of dialogue in the future.)

Outside of that specific example, work for artists in the entertainment industry is expected to shrink, since AI could replace people and reduce the number of employees a company needs to hire, saving the company money at the expense of artists’ jobs.

AI art is not actual art

The AI does not understand what art is; it just generates images based on algorithms. Humans want to see real art, not art created by a machine. That’s sort of the point of art, to see the different viewpoints of different humans.

There are probably tons more arguments for and against, but these are the big ones I can think of for now. I align with some of them and not with others. And that’s not to say that I think some people’s opinions are wrong, but I don’t like when people ignore the nuances. Not everything is black and white. Just like many other controversial subjects, there is not necessarily a “right answer” you can point to and say “this is irrefutable”.

What I think

My problem with “AI is bad”, “art generated by AI isn’t real”, etc. is that we’re not attacking the real issue, just the argument directly. It shouldn’t matter if “AI art is real art or not”, but what does matter is what happens as a result of us using AI art often vs banning it. There are upsides and downsides to both, and the way it affects people depends on the person.

If AI art is allowed unrestricted for everyone in all marketplaces, then large AI companies benefit because they will have more users and therefore more money, and non-artists benefit because they can now “produce” art without having to learn to draw. The reverse happens if AI art is completely banned. AI companies will have to move away from that product and non-artists will have to either do their own art or find and pay artists to do it for them. The artists are the ones who benefit, because their jobs are secure (for now, who knows what other abomination mankind can make in the future).

Where I stand is that AI should be used to assist people but not to do work for people. It should be fine to ask AI a question if you’re having a hard time searching online for the answer, or to throw ideas at it. But when you have to do something where it’s important the answer is correct (writing a report, writing important code, etc.) you should be doing it yourself using online sources. Of course, this is a bit hard now because you might indirectly be using AI through others’ work, but fundamentally you yourself are not using AI.

By this, I mean I disagree with using AI to produce the entire product. That means I disagree with vibe coding and agree that it’s mostly harmful (ask me how I know). I also disagree with using AI images as a final product, either to put on art websites like DeviantArt or to use directly in the final product of some other media, like how Call of Duty Black Ops 7 recently used AI-generated images directly as banners, despite having a large team of employees where a real person could have presumably done it instead.

The most important thing I think we value is the time and effort that went into something. People see a work of some kind, whether that’s a movie, a piece of physical/digital art, a video game, a TV show, an album, or a book, and go “wow, a lot of love and effort was put into this.” AI content often doesn’t (or shouldn’t) give you that feeling. You often think instead, “wow, this person let a computer do all of the thinking with no effort of their own.” It’s not another accomplishment of the human race, it’s a random generator that someone kept rolling until it looked good. And I kind of agree with that.

As for model training, I don’t like the idea that models are constantly being trained on others’ work without permission, although there’s the counterargument that humans also learn from others’ work without permission, and that’s how people pick up different styles and develop their own. Preferably, we wouldn’t be seeing AI image generators produce images almost identical to their training images, or LLMs produce large blocks of code that look almost identical to an existing piece of code.

I think that if we use models, we should try to at least make them as open as possible and respect those who don’t want to be a part of it. That means respecting sites’ wishes in robots.txt, being transparent about where the training data came from, and making it easy for anyone else to build the model. I’m not a huge fan of large companies making closed models and locking them behind a paywall, even if they are expensive to train.
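For what it’s worth, checking robots.txt is not hard. Below is a small sketch using Python’s standard `urllib.robotparser`. The robots.txt contents, the `ExampleTrainingBot` user agent, and the example.com URLs are all made up for illustration, and real crawlers differ in how (and whether) they honor these rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an art site that opts out of one crawler.
# "ExampleTrainingBot" is a made-up user agent for illustration.
ROBOTS_TXT = """\
User-agent: ExampleTrainingBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def may_fetch(robots_txt, user_agent, url):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

A scraper that respects the site would call something like `may_fetch(ROBOTS_TXT, "ExampleTrainingBot", url)` before downloading each image and skip anything that comes back `False`.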

In a perfect world, a group of creators could allow their data to be trained on, with the catch that they would get paid whenever anyone uses the model. Obviously, this is a bit impossible given that some creators’ work might be used more than others’, AI doesn’t really know whose work it’s pulling from, and no one would pay for models when they could use free models instead. Additionally, most creators would probably not agree to this anyway. And those who did would still face controversy; see the Arc Raiders discussion above. Regardless, I feel like there should be a way to improve how things are in the training space rather than saying “there’s no problem with it” or “there’s no way to fix it”.

I personally do all AI work locally on my own machine with my own GPU. Even if the things I make are harmless and tame, I still don’t like to give all of the things I am doing to a big company. Sure, I use Discord which could — at any time — probably read any message in any of the chats I’m in. But I don’t necessarily put everything personal about myself and what I’m doing into Discord. Likewise, I don’t put everything I’m doing into ChatGPT and friends either. When it’s local, I can run the tool, see its results, keep or delete them, and move on knowing a big company isn’t mining my data and using it to train their models further for free.

Energy usage is also an interesting thing to bring up. Training obviously uses a lot of resources, but is running a local LLM on my own machine using any more energy than me using Tilt Brush in VR? Both use a large amount of energy to run, but one is talked about in a much more negative light than the other. This could probably use some more research, but I wonder if, when doing this locally, this argument is a bit overblown.

Lastly, I like to think of AI as just another tool. All kinds of tools can be used incorrectly, and AI seems to be the worst offender. You can do all kinds of bad things with it, some even illegal, like fraud. But you can also do some good things with it too. Maybe AI really can find actual bugs in your code, or touch up mistakes in a photo better than you could in Photoshop. I think the problem right now is that people aren’t trained well enough to know what to do with AI output, or to accept that AI is now part of the world and that the genie isn’t going back into the bottle.

Itch.io consensus

Getting back to the game development side now. I spent a while looking at itch.io threads, game jam rules, Reddit threads talking about itch.io, Steam discussions comparing itch.io and Steam AI disclosure rules, and talking to friends online and in person about what they thought.

Itch.io seems to be primarily on the side of non-AI, but to varying degrees. Many game jams disallow AI but don’t clarify what “disallowing AI” means. Some explicitly say “no AI at any part of the development process”, some say “no AI in the final product but using it for inspiration is fine.”

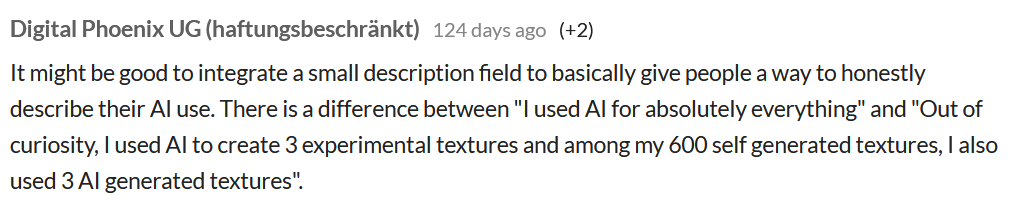

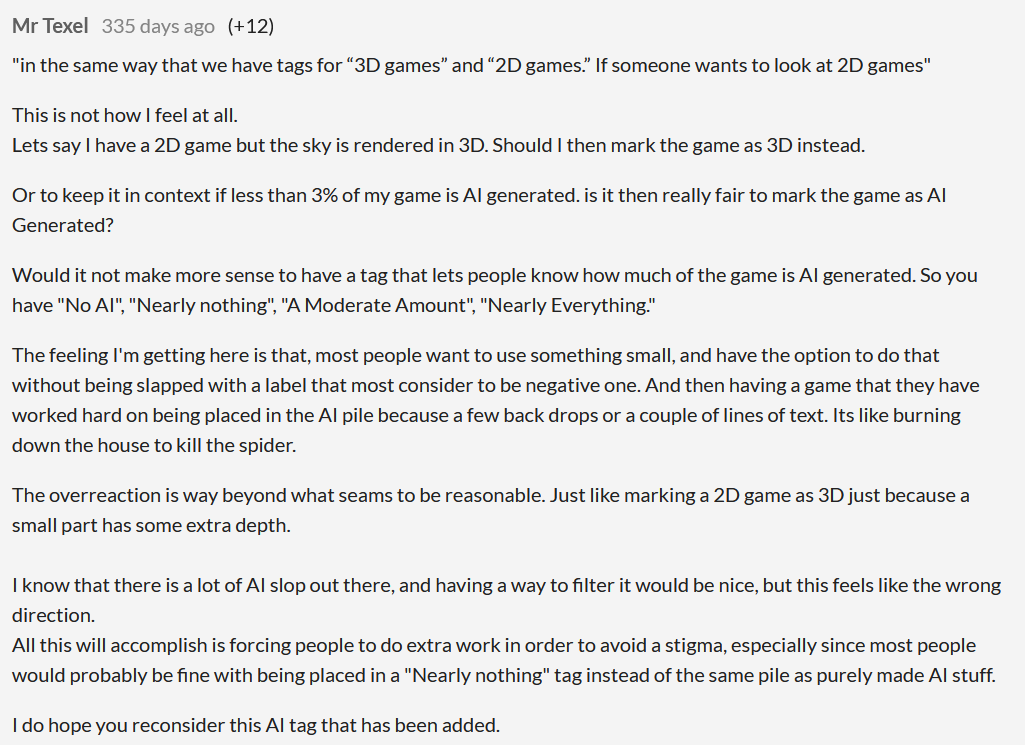

On the forums, those against AI are against it for different reasons. Some just want to filter out AI art, and some are so against the concept that AI in any part of the pipeline is enough to never look at your game (sort of like an AI vegan, although not to be confused with “AI veganism” which surprisingly has a Wikipedia article, [link].) Those for AI (at least, partially for AI) are annoyed at the lack of control over the tag and the fact that a game with little AI is clumped together with games with a lot of AI. Others suggest that if you can get away with lying about not using it, it probably isn’t enough AI usage to matter.

My take (and others’ take) is that there should be more fine-grained control. Ask more questions about the AI content. Was AI used during planning only, or were assets generated for the game? Was a majority of the assets generated by AI, or just a few? Are the AI-generated parts there on purpose, as satire or to poke fun at AI? Or was it just because the developer wanted to churn out five games in a single month with little effort? Additionally, a box (separate from the game’s description) for the author to write their own explanation could be nice.
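To show what I mean, here is a rough sketch of what such a disclosure could look like as data. This is purely my own mock-up, not anything itch.io has proposed: the `AIDisclosure` class, the field names, and the categories are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    """Where in development AI was used (hypothetical categories)."""
    NONE = "no AI used"
    PLANNING = "planning and inspiration only"
    ASSETS = "assets in the shipped game"


class Share(Enum):
    """How much of the shipped game is AI-generated (hypothetical)."""
    NONE = "none"
    FEW = "a few assets"
    MAJORITY = "a majority of assets"


@dataclass
class AIDisclosure:
    stage: Stage = Stage.NONE
    share: Share = Share.NONE
    intentional: bool = False  # AI look used on purpose, e.g. as satire
    note: str = ""             # free-form explanation from the author

    def summary(self) -> str:
        """Build a one-line description a store page could display."""
        if self.stage is Stage.NONE:
            return "No generative AI used."
        parts = [f"AI used for {self.stage.value}"]
        if self.stage is Stage.ASSETS:
            parts.append(f"share: {self.share.value}")
        if self.intentional:
            parts.append("intentional (satire or commentary)")
        text = "; ".join(parts) + "."
        return f"{text} Author's note: {self.note}" if self.note else text
```

With something like this, a player could filter on `stage` or `share` instead of a single yes/no tag, and a game that only used AI for inspiration would no longer be lumped in with one whose assets are mostly generated.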

Reddit was roughly the same. Depending on the subreddit, you can find people very against AI and proudly proclaiming that they will “filter out AI as soon as the option becomes available.”

Others aren’t too sure. Some think some level of assistance is fine. Their reasoning is that disclosure is about the output, and if you took the output of ChatGPT and made your own output with it plus your own thoughts, it no longer counts as output from ChatGPT.

In-person discussions were mostly the same. People I asked believed that using AI for ideas and advice (even if bad advice) was okay, but using it directly in a game (art, dialogue, etc.) was probably not. AI coding opinions were mixed, ranging from “video game code is already bad, we don’t need to make it worse” to “I don’t see a problem with that since the end user doesn’t care what the underlying code looks like”. When asked if they thought my game on its current trajectory should be classified as AI, the answers were a bit mixed. Some said it probably doesn’t count as AI if it’s just using AI-generated art for inspiration. Others said it’s probably best to tag it just in case.

No matter where I looked, it seemed no one had a good answer. People’s opinions were all over the place. What do we really want from this? We obviously don’t want AI slop, but we also don’t want works to be passed up because they were filtered out, all because they used a tiny bit of AI.

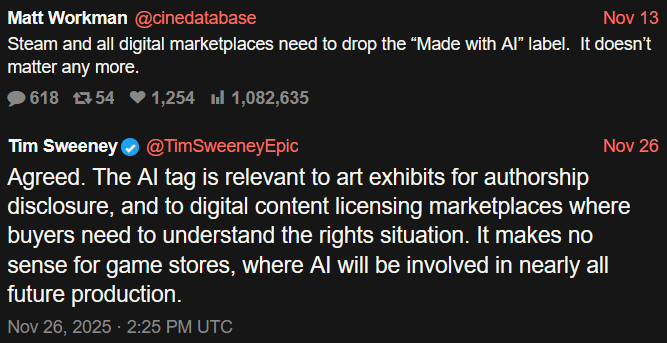

Something I forgot to ask was whether AI disclosure should exist in the first place, but I figure most of the people I asked would be for it. I think we’ve all seen enough AI slop to understand that we should have an easy way of telling what is and isn’t AI and have a way to filter it out. I just think it’s sweeping things under the rug to say that all AI usage is the same. That being said, right before posting this, I found that Tim Sweeney (of Epic Games) had this to say about AI disclosure:

I don’t have anything to add to this that hasn’t already been said in this post, but I’m putting it here just to have it here. I obviously disagree with this statement, and it’s hard not to guess why he might feel this way given his position as the CEO of a large game company.

Conclusion

I think Blue from OSP said it best in a recent video [link]:

Conclusion: not my place to give a conclusion! I just think it’s real neat!

I took enough time out of multiple days to write about 4,500 words on this post, but honestly, I don’t really know how to feel about it all. Where do I stand on this? Where am I going with this project? I’ll have time to change my decision as the release date gets closer. Maybe I’ll choose to eliminate AI usage altogether. Maybe I’ll find an artist to help out. Who knows.

Still, what about the future? What are my opinions? I don’t care much about how this goes either way. Does AI gain a better sentiment across all fields? Do we find a more environmentally friendly and ethical way to use AI? Great! Do we end up creating laws to ban it? (This is unlikely, but let’s entertain the thought.) Fine, I’ll find a way in the world without it. It’s a thing that’s nice to have but not mandatory by any means. We lived without AI, we can continue to live without it still.

There are a ton more adjacent subjects I wanted to talk about in this post, such as “bad attribution” and what counts as “original works”, store-bought stock content (stock images, Unity asset store, etc.), the difference between artists and programmers, and more. But for now, I’ve edited them out and plan to put them in future posts instead.

Before I go, I want to point out a group of people who seem to be ignored often: the people making the AI itself and figuring out the algorithms and whatnot. I don’t mean the people who are training models on the internet but the researchers who figured out how to make a decent chatbot. Regardless of your feelings about it, the technology is very interesting and I hardly hear anything about the developers who actually make it happen, only about those who make a crappy product and raise millions in VC funding for it.

Okay that’s about it, dcn out.